In 2023, I am not making any resolutions. Instead, I want to focus on habits that will compound into big changes. This year, I will continue writing, but I am shifting my focus slightly to a new topic related to clean code and best practices in the field of data: DataOps. This article is an introduction to this great field.

My First Experience with DataOps

I did not grasp the full extent of what DataOps meant until about a year ago.

I was the only data scientist, managing the data pipelines that supported all of the company’s data requests. I automated those pipelines with GitLab CI/CD so that each push flowed from the repository → unit tests → build and push to AWS Elastic Container Registry (ECR) → deployment on AWS Elastic Container Service (ECS). With that automation, I could quickly make the changes that were needed (mostly hotfixes), and the engineers loved that they could make those changes too! They didn’t need to wait on me to run tests or change pipelines, especially when one of them was on call.

Now for the fun part. As a data scientist, I got a ton of requests for new data to pull in, and I saw that I could optimize our data pipelines to handle more of the incoming data. Unfortunately, once this change was merged into the repo and deployed to ECS, everything slowly started falling apart. And I did not know.

Customers reached out about the data not showing up correctly. Queries did not finish in time. The data warehouse was not able to handle the number of files at the 5-minute cadence. Queues were piling up with data.

The problem did not become evident until a few weeks later, and it took a dedicated team of engineers and me to figure out what went wrong and how to prevent it in the future. Here are a few of the lessons I learned.

Unit tests, integration tests, etc. to increase code coverage

Having a staging instance that accurately mirrors the production instance to test changes on

Data warehouses are not intended to handle that many small files (about 2 GB per file per Kafka partition) in less than 5 minutes

Monitoring queue, execution, and completion times

Separate the queries that serve user-facing content, such as a website, from the analytic loads

Keep track of the statistics of the data at each step.
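The monitoring lesson above (queue, execution, and completion times) can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the `JobTiming` class, the `jobs_over_budget` helper, and the job names are hypothetical, and the 300-second budget simply mirrors the 5-minute cadence from my story. A real setup would likely pull these timestamps from the scheduler or queue itself.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class JobTiming:
    """Timestamps for one pipeline job (hypothetical structure)."""
    job_id: str
    enqueued_at: datetime
    started_at: datetime
    completed_at: datetime

    @property
    def queue_seconds(self) -> float:
        # Time the job waited in the queue before a worker picked it up.
        return (self.started_at - self.enqueued_at).total_seconds()

    @property
    def execution_seconds(self) -> float:
        # Time the worker spent actually running the job.
        return (self.completed_at - self.started_at).total_seconds()


def jobs_over_budget(jobs, cadence_seconds=300.0):
    """Return ids of jobs whose queue + execution time exceeds the cadence.

    A job that takes longer than the cadence means work is piling up.
    """
    return [
        j.job_id
        for j in jobs
        if j.queue_seconds + j.execution_seconds > cadence_seconds
    ]


# Made-up example data: one healthy job and one that falls behind.
t0 = datetime(2023, 1, 1, 12, 0, 0)
jobs = [
    JobTiming("load-a", t0, t0 + timedelta(seconds=10), t0 + timedelta(seconds=130)),
    JobTiming("load-b", t0, t0 + timedelta(seconds=200), t0 + timedelta(seconds=520)),
]
slow = jobs_over_budget(jobs)
print(slow)
```

Had I been watching a number like this, the growing queue time would have shown up long before customers noticed.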

I learned a ton more, but I just wanted to showcase some of the important ones. Implementing these methods, my friends, is DataOps: the act of ensuring that your infrastructure (data pipelines, warehouses, or processes) and your data are kept in check.

DataOps

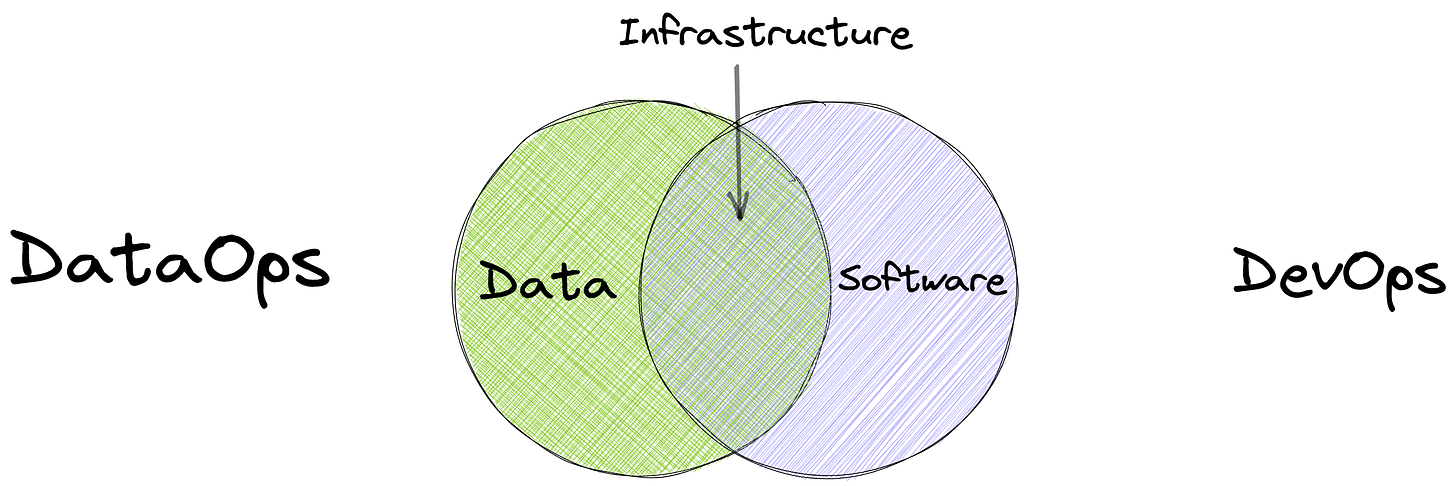

Let’s be more explicit about the definition. DataOps is a set of practices that combines software engineering and data management to improve the quality, speed, and reliability of data analytics and machine learning. It aims to bring the principles and practices of DevOps to the field of data management and analytics, in order to enable organizations to more effectively collect, process, and analyze large volumes of data.

DataOps practices include automating data pipelines, implementing testing and validation processes for data quality, and creating a culture of continuous data integration, delivery, and deployment. By adopting DataOps practices, organizations can improve the accuracy and speed of their data analytics and machine learning initiatives, and better respond to changing business needs and market conditions.
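To make "testing and validation processes for data quality" a little more concrete, here is a minimal sketch of a validation gate that a pipeline step could run before loading a batch. The `validate_rows` function, the field names, and the sample batch are all hypothetical; dedicated data validation libraries exist, and this only shows the idea using plain Python.

```python
def validate_rows(rows, required_fields, non_negative_fields=()):
    """Return a list of human-readable problems found in `rows`.

    `rows` is a list of dicts. An empty result means the batch passed.
    """
    problems = []
    if not rows:
        problems.append("batch is empty")
    for i, row in enumerate(rows):
        # Every required field must be present and not None.
        for field in required_fields:
            if row.get(field) is None:
                problems.append(f"row {i}: missing {field}")
        # Selected numeric fields must not be negative.
        for field in non_negative_fields:
            value = row.get(field)
            if value is not None and value < 0:
                problems.append(f"row {i}: negative {field}")
    return problems


# Made-up batch with one missing id and one negative amount.
batch = [
    {"order_id": 1, "amount": 19.99},
    {"order_id": None, "amount": 5.00},
    {"order_id": 3, "amount": -2.50},
]
problems = validate_rows(
    batch,
    required_fields=["order_id", "amount"],
    non_negative_fields=["amount"],
)
for problem in problems:
    print(problem)
```

A pipeline could refuse to load the batch, or route it to a quarantine location, whenever the returned list is not empty.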

DevOps != DataOps

DevOps and DataOps are not the same thing, but their relationship is symbiotic. There is a huge overlap between the two, yet each is distinct in its own way.

Both DevOps and DataOps require service and infrastructure maintenance. However, each has its own area of expertise: for DataOps it is data, while for DevOps it is software.

To be exact, DevOps aims to enable organizations to deliver high-quality software faster and more reliably. It seeks to eliminate silos between software development and operations teams and to create a culture of continuous integration, delivery, and deployment. DevOps practices include version control, testing, and deployment automation, as well as infrastructure as code and monitoring.

Why Focus on DataOps?

It is hard to imagine the impact that DataOps has until you have an experience similar to the one I shared earlier, and I do hope you never have to go through that. These experiences cannot be prevented, but their impact can be minimized. Here are a few great reasons for you to focus on DataOps:

Reduced costs and stress

You are now spending less time and energy on checking your data and the pipelines. You can therefore be more proactive and prevent situations rather than continually being in reactive mode. Plus, your engineers will be much happier!

Increased speed and agility

Changes in pipelines can be easily tested and deployed. Because these changes are recorded and monitored, we can identify problems more quickly and fix them before they blow up on us.

Improved data quality

We have a record of row counts, averages of values, and other descriptive statistics for various time slots. With these values, we are able to set alarms to make sure data is moving through the pipelines as expected.
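As a rough sketch of how such an alarm might work, the snippet below summarizes a time slot into a row count and a mean, then compares it with a baseline slot. The `snapshot` and `check_against_baseline` helpers, the 50% tolerance, and the sample values are all assumptions for illustration; in practice the thresholds would be tuned to how your data actually behaves.

```python
from statistics import mean


def snapshot(values):
    """Summarize one time slot of a numeric column."""
    return {"rows": len(values), "mean": mean(values) if values else 0.0}


def check_against_baseline(current, baseline, tolerance=0.5):
    """Return alarm messages for metrics that drift more than `tolerance`
    (as a fraction of the baseline value) away from the baseline."""
    alarms = []
    for metric, expected in baseline.items():
        actual = current[metric]
        if expected == 0:
            # Avoid dividing by zero: any non-zero value counts as drift.
            drift = 0.0 if actual == 0 else float("inf")
        else:
            drift = abs(actual - expected) / abs(expected)
        if drift > tolerance:
            alarms.append(f"{metric}: expected ~{expected}, got {actual}")
    return alarms


# Made-up values: the current slot has far fewer rows than the baseline.
baseline = snapshot([10.0, 12.0, 11.0, 9.0])
current = snapshot([10.0])
alarms = check_against_baseline(current, baseline)
for alarm in alarms:
    print(alarm)
```

Here the mean barely moves, but the row count drops by 75%, which is exactly the kind of silent failure that would otherwise go unnoticed for weeks.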

Final Thoughts

In this article, we covered what DataOps is, how it differs from DevOps, and lastly the advantages of DataOps. This article is only the beginning, as we will continue to unlock the world of DataOps.
