The Importance of DataOps in Data Roles

How our roles correlate with the highest return on investment

DataOps is an essential component of any data team and should not be overlooked when designing the architecture of a data-driven organization. Implementing DataOps matters more than debating whether a dedicated DataOps role is necessary. Let's take a few moments to review the main data roles and how DataOps contributes to each of them.

Roles

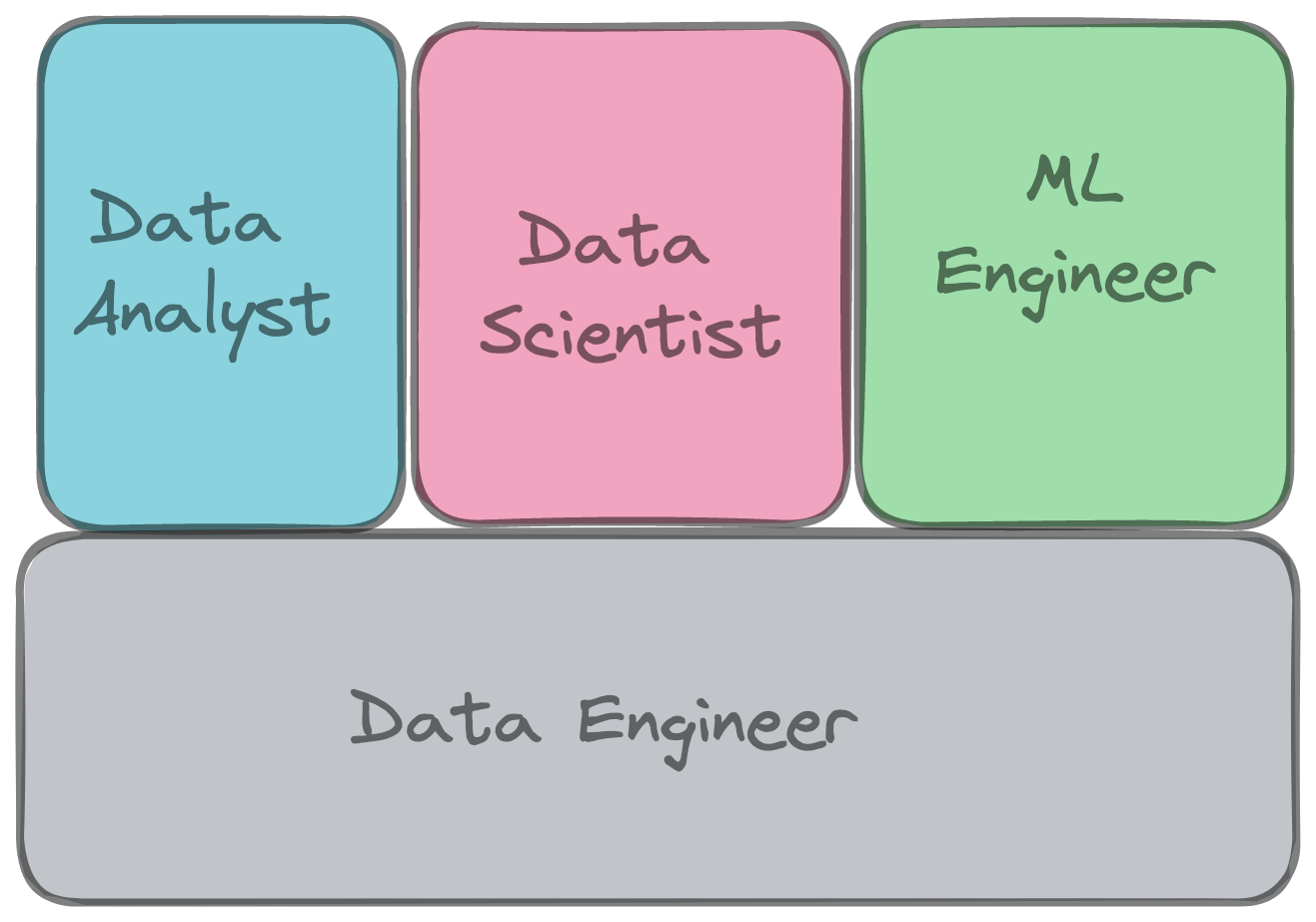

The four main roles are data engineers, data analysts, data scientists, and machine learning engineers.

Data Analyst

Data analysts are responsible for running SQL queries, working in spreadsheets, and using various BI tools to analyze data. They are domain experts in the data they work with and know its definitions, characteristics, and quality problems. Their downstream customers are business users, management, and executives.

Data Scientist

Data scientists build models that make predictions and recommendations. These models are then evaluated on live data and deliver value in various ways, such as automated actions, product recommendations, and economic forecasts.

Machine Learning Engineer

Machine learning engineers are expected to design, develop, test, and implement machine learning algorithms. Additionally, they must be able to evaluate the performance of their models, make changes and improvements, and ensure that they are correctly deployed into production.

Data Engineer

Data engineers are responsible for managing the entire data engineering process, from gathering data from source systems to providing data for use cases such as analytics and machine learning.

The Role of DataOps in Data Engineering

Data engineers, as defined above, do data engineering. But what does data engineering actually mean?

In “Fundamentals of Data Engineering,” Matt Housley and his co-author describe data engineering as "the intersection of security, data management, DataOps, data architecture, orchestration, and software engineering," suggesting that DataOps plays a critical role throughout the data engineering process. I would go as far as to say that DataOps is not just one part of data engineering but a crucial undercurrent that requires dedicated attention. Without at least one member of the data engineering team focused solely on DataOps, the data team is likely to run into many difficulties and become less efficient.

Let’s dive deeper into how this happens.

Data Team’s Integration of DataOps

DataOps provides a unified system that delivers reliable, secure service while reducing the complexity of data management.

By this definition, data engineers are the most affected by DataOps. They are often tasked with building pipelines and maintaining the underlying services that hold up the data infrastructure. As we all know, things fail. All the time.

“Everything fails, all the time.”

Werner Vogels, CTO of Amazon

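When pipelines and data infrastructure fail, the rest of the team is affected too: analysts see stale dashboards, and data scientists and machine learning engineers end up working with missing or outdated data.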

Regardless of role, DataOps needs to be an integral part of the team, and the team needs to be an integral part of DataOps.

Next, we will examine the approach Christopher Bergh, CEO of DataKitchen, uses to help teams implement DataOps.

DataOps Principles

DataKitchen set out what it considers a better way to develop and deliver analytics. Here are its 18 DataOps principles.

Continually satisfy your customer

Our highest priority is to satisfy the customer through early and continuous delivery of valuable analytic insights, on timescales ranging from a few minutes to several weeks.

Value working analytics

We believe the primary measure of data analytics performance is the degree to which insightful analytics are delivered, built on accurate data and robust frameworks and systems.

Embrace change

We welcome evolving customer needs and embrace them to gain a competitive advantage. Face-to-face conversation is the most efficient, effective, and agile way to communicate with customers.

It’s a team sport

Analytic teams will always have a variety of roles, skills, favorite tools, and titles. A diversity of backgrounds and opinions increases innovation and productivity.

Daily interactions

Customers, analytics teams, and operations need to collaborate every day during the project.

Self-organize

We believe that the best analytic insights, algorithms, architectures, requirements, and designs emerge from self-organizing teams.

Reduce heroism

As the pace and breadth of demand for analytic insights keep increasing, analytics teams should reduce their reliance on individual heroics and build sustainable, scalable teams and processes.

Reflect

Analytic teams should fine-tune their operational performance by regularly reflecting on feedback from their customers, from themselves, and from operational statistics.

Analytics is code

Analytic teams use a variety of tools to access, integrate, model, and visualize data. Fundamentally, each of these tools generates code and configuration that describe the actions taken on the data to deliver insight.

Orchestrate

Orchestrating data, tools, code, environments, and the work of analytic teams from start to finish is crucial for successful analytics.
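As a rough illustration of beginning-to-end orchestration, the sketch below runs a small pipeline in dependency order using Python's standard-library `graphlib` module (Python 3.9+). The task names, the `run_pipeline` helper, and the dependency graph are all hypothetical; a real team would typically use a dedicated orchestrator with scheduling, retries, and alerting, none of which this sketch attempts.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set

def run_pipeline(tasks: Dict[str, Callable[[], None]],
                 deps: Dict[str, Set[str]]) -> List[str]:
    """Run every task after all of its upstream tasks have finished.

    `deps` maps a task name to the names of the tasks it depends on.
    Returns the order in which the tasks actually ran.
    """
    # Include every task, even ones with no dependencies, in the graph.
    graph = {name: deps.get(name, set()) for name in tasks}
    executed: List[str] = []
    # static_order() yields each task only after all of its predecessors;
    # it raises graphlib.CycleError if the dependencies contain a cycle.
    for name in TopologicalSorter(graph).static_order():
        tasks[name]()  # an exception here stops the whole run
        executed.append(name)
    return executed

# Hypothetical pipeline: each task just records that it ran.
log: List[str] = []

def make_task(name: str) -> Callable[[], None]:
    return lambda: log.append(name)

names = ["extract", "validate", "transform", "publish_dashboard", "train_model"]
tasks = {name: make_task(name) for name in names}
deps = {
    "validate": {"extract"},
    "transform": {"validate"},
    "publish_dashboard": {"transform"},  # analysts' downstream output
    "train_model": {"transform"},        # data scientists' downstream output
}

order = run_pipeline(tasks, deps)
print(order)
```

Because `validate` sits upstream of both the dashboard and the model, an exception raised there stops the run before either downstream task executes, which is the kind of shared guardrail that serves every role on the team.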

Make it reproducible

Reproducible results are required, so we version everything: data, low-level hardware and software configurations, and the code and configuration specific to each tool in the toolchain.
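One way to make "version everything" concrete is to derive a run identifier from the ingredients of the run. The sketch below is a minimal example that assumes SHA-256 content hashing is an acceptable versioning scheme: it fingerprints the input data, the transformation code, the tool configuration, and the Python version. `fingerprint_run` and the sample inputs are hypothetical, and the sketch records only the Python version rather than full hardware and software configuration; it complements, rather than replaces, version control for code and data.

```python
import hashlib
import json
import platform
from typing import Any, Dict

def fingerprint_run(data: bytes, code: str, config: Dict[str, Any]) -> str:
    """Return a short, deterministic ID for one pipeline run.

    The ID changes if the input data, the code, the configuration,
    or the Python version changes.
    """
    h = hashlib.sha256()
    for part in (
        data,
        code.encode("utf-8"),
        # sort_keys makes the encoding independent of dict key order
        json.dumps(config, sort_keys=True).encode("utf-8"),
        platform.python_version().encode("utf-8"),
    ):
        # Length-prefix each part so boundaries between parts are unambiguous.
        h.update(len(part).to_bytes(8, "big"))
        h.update(part)
    return h.hexdigest()[:12]

# Hypothetical inputs for illustration only.
data = b"order_id,amount\n1,9.99\n2,14.50\n"
code = "SELECT order_id, SUM(amount) FROM orders GROUP BY order_id"

run_a = fingerprint_run(data, code, {"currency": "USD", "rounding": 2})
run_b = fingerprint_run(data, code, {"rounding": 2, "currency": "USD"})
run_c = fingerprint_run(data, code, {"currency": "EUR", "rounding": 2})
print(run_a == run_b)  # same ingredients, so the same version
print(run_a == run_c)  # a configuration change yields a new version
```

Sorting the configuration keys and length-prefixing each part keep the ID deterministic, so identical inputs always map to the same version.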

Disposable environments

It is essential to minimize the cost of experimentation for analytics team members. We do this by giving them isolated, safe, and disposable technical environments that are easy to create and that mirror their production environment.
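As a small-scale sketch of an isolated, disposable environment, the example below creates an in-memory SQLite database seeded with a production-like schema and discards it on exit. The `orders` schema, the `disposable_env` helper, and the sample rows are hypothetical; in practice the schema would come from the real warehouse, and teams often use containers or cloud sandboxes rather than SQLite.

```python
import sqlite3
from contextlib import contextmanager
from typing import Iterator, List, Tuple

# Hypothetical production schema; a real setup would export it from the warehouse.
PRODUCTION_SCHEMA = """
CREATE TABLE orders (order_id INTEGER PRIMARY KEY, amount REAL NOT NULL);
"""

@contextmanager
def disposable_env(sample_rows: List[Tuple[int, float]]) -> Iterator[sqlite3.Connection]:
    """Yield a throwaway database that mirrors the production schema."""
    conn = sqlite3.connect(":memory:")  # isolated: lives only in this process
    try:
        conn.executescript(PRODUCTION_SCHEMA)
        conn.executemany("INSERT INTO orders VALUES (?, ?)", sample_rows)
        conn.commit()
        yield conn
    finally:
        conn.close()  # disposable: everything is discarded on exit

# Experiment freely: this schema change never touches production.
with disposable_env([(1, 9.99), (2, 14.50)]) as env:
    env.execute("ALTER TABLE orders ADD COLUMN discount REAL DEFAULT 0")
    total = env.execute("SELECT SUM(amount - discount) FROM orders").fetchone()[0]
    print(round(total, 2))
```

Each call to `disposable_env` starts from the same clean schema, so a failed experiment costs nothing to clean up and never leaks into the next one.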

Simplicity

We believe that continuous attention to technical excellence and good design enhances agility; likewise, simplicity, the art of maximizing the amount of work not done, is essential.

Analytics is manufacturing

Analytic pipelines are analogous to lean manufacturing lines. A fundamental concept of DataOps is process thinking aimed at continuously improving the efficiency with which analytic insight is produced.

Quality is paramount

Analytic pipelines should be built to detect abnormalities and security issues in code, configuration, and data automatically, and to give operators continuous feedback so that errors are avoided.
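The sketch below shows what automated abnormality detection can look like for data: a few checks on an incoming batch, plus a gate that halts the pipeline and tells the operator which checks failed. The column name, thresholds, and the `check_batch` and `quality_gate` helpers are hypothetical; dedicated data-quality tools offer far richer checks, and security scanning of code and configuration is out of scope here.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

@dataclass
class CheckResult:
    name: str
    passed: bool
    detail: str

def check_batch(rows: List[Dict[str, Optional[float]]], column: str,
                min_value: float, max_value: float,
                max_null_rate: float = 0.01) -> List[CheckResult]:
    """Run simple automated checks on one column of an incoming batch."""
    values = [row.get(column) for row in rows]
    null_rate = sum(v is None for v in values) / len(values) if values else 1.0
    out_of_range = [v for v in values
                    if v is not None and not min_value <= v <= max_value]
    return [
        CheckResult("non_empty", len(rows) > 0, f"{len(rows)} rows"),
        CheckResult("null_rate", null_rate <= max_null_rate,
                    f"{null_rate:.1%} nulls (limit {max_null_rate:.1%})"),
        CheckResult("range", not out_of_range,
                    f"{len(out_of_range)} values outside [{min_value}, {max_value}]"),
    ]

def quality_gate(results: List[CheckResult]) -> None:
    """Stop the pipeline and report exactly which checks failed."""
    failed = [r for r in results if not r.passed]
    if failed:
        raise RuntimeError("; ".join(f"{r.name}: {r.detail}" for r in failed))

# Hypothetical batch of order amounts with one null and one negative value.
batch = [{"amount": 9.99}, {"amount": None}, {"amount": -5.0}, {"amount": 14.5}]
results = check_batch(batch, "amount", min_value=0.0, max_value=10_000.0)
try:
    quality_gate(results)
except RuntimeError as err:
    print(f"Batch rejected: {err}")
```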

Monitor quality and performance

Our goal is to monitor performance, security, and quality measures continuously, so that we detect unexpected variation and generate operational statistics.
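For continuous monitoring, one simple way to detect unexpected variation is to compare each new operational statistic against its recent history. This minimal sketch assumes a z-score rule with a threshold of three standard deviations, applied to hypothetical daily row counts; the `is_unexpected` helper is illustrative, and production monitoring would usually also account for seasonality and trends.

```python
from statistics import mean, stdev
from typing import Sequence

def is_unexpected(history: Sequence[float], latest: float,
                  threshold: float = 3.0) -> bool:
    """Flag `latest` if it lies more than `threshold` standard deviations
    from the mean of `history` (a simple z-score check)."""
    if len(history) < 2:
        return False  # not enough history to estimate normal variation
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu  # any change from a perfectly flat history
    return abs(latest - mu) / sigma > threshold

# Hypothetical row counts from the last seven daily loads.
daily_row_counts = [10_120, 9_980, 10_050, 10_210, 9_940, 10_080, 10_030]

for latest in (10_100, 4_500):
    status = "unexpected" if is_unexpected(daily_row_counts, latest) else "normal"
    print(latest, status)
```

The same check applies to run durations, error counts, or any other operational statistic a team chooses to track.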

Reuse

We believe a foundation of efficient analytics is avoiding the repetition of work that an individual or team has already done.

Improve cycle times

We should minimize the time and effort needed to turn a customer need into an analytic idea, build it in development, release it as a repeatable production process, and finally refactor and reuse that product.

Here are the common themes in these principles:

Emphasizing the importance of individuals and their interactions in achieving goals, rather than relying solely on processes and tools.

Prioritizing the active use and analysis of data, rather than focusing on extensive documentation.

Encouraging collaboration with customers to understand their needs, rather than approaching projects with a focus on contract negotiation.

Encouraging experimentation, iteration, and continuous feedback to improve designs, rather than trying to create perfect designs from the outset.

Promoting cross-functional ownership of operations to break down silos and ensure that everyone is working towards common goals.

Final thoughts

DataOps is a way of thinking and behaving, and for many of today’s data teams it represents a fundamental shift. It’s a new way to think about the role that data plays in your organization and how it can deliver value to your business.